Introduction

In this project, I developed a Facial Expression Recognition (FER) system that classifies seven expression classes: Ekman's six basic emotions (anger, disgust, fear, happiness, sadness, and surprise) plus a neutral class. The system is built by training a custom deep learning model on a high-quality, carefully curated dataset.

The project blends classic and modern FER insights, aiming to make emotion recognition more accurate and accessible even with limited hardware.

The Challenge

Most state-of-the-art FER models are either trained on constrained, lab-controlled datasets or require high-end hardware to reach competitive accuracy.

Their accuracy also degrades under real-world conditions such as varied lighting, face coverings, or subtle expressions.

The Solution

A deep learning-based FER system trained on a hybrid dataset combining real, synthetic, and web-sourced images.

The model is a fine-tuned ResNet-18, combined with custom strategies such as cross-domain data curation. This approach offers a balanced trade-off between performance and resource efficiency.

Process

The approach followed four essential phases:

01

Problem Framing

Defined the classification goal (seven classes: six Ekman emotions plus neutral), established hardware constraints, and set performance targets.

02

Dataset Development

Curated and manually reviewed 23,450 high-quality images from multiple datasets and sources, ensuring label accuracy and consistency.

03

Model Training

Implemented a ResNet-18 architecture with data preprocessing, cosine learning rate scheduling, and reproducible training routines.

04

Evaluation & Benchmarking

Achieved 90% accuracy and 90% F1-score. Compared results with state-of-the-art models from recent FER literature.
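The write-up reports both accuracy and an F1-score but does not say how F1 was averaged across the seven classes. The sketch below assumes macro-averaging (the unweighted mean of per-class F1), which also exposes per-class scores such as the Happy and Surprise results. The label strings and function names are hypothetical, not taken from the project's code.

```python
from collections import Counter

# Hypothetical label strings for the seven classes.
CLASSES = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def per_class_f1(y_true, y_pred, classes=CLASSES):
    """F1 per class, using F1 = 2TP / (2TP + FP + FN)."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the predicted class received a wrong sample
            fn[t] += 1  # the true class missed a sample
    scores = {}
    for c in classes:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores[c] = 2 * tp[c] / denom if denom else 0.0  # 0.0 when the class never occurs
    return scores


def summarize(y_true, y_pred, classes=CLASSES):
    """Return (accuracy, macro-F1, per-class F1 dict)."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    f1 = per_class_f1(y_true, y_pred, classes)
    macro_f1 = sum(f1.values()) / len(classes)  # unweighted mean over all listed classes
    return accuracy, macro_f1, f1


if __name__ == "__main__":
    y_true = ["happiness", "happiness", "sadness", "surprise"]
    y_pred = ["happiness", "sadness", "sadness", "surprise"]
    acc, macro, per_class = summarize(y_true, y_pred, ["happiness", "sadness", "surprise"])
    print(acc, round(macro, 3), max(per_class, key=per_class.get))  # 0.75 0.778 surprise
```

In practice, scikit-learn's `f1_score(y_true, y_pred, average="macro")` computes the same quantity; the hand-rolled version just makes the formula explicit.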

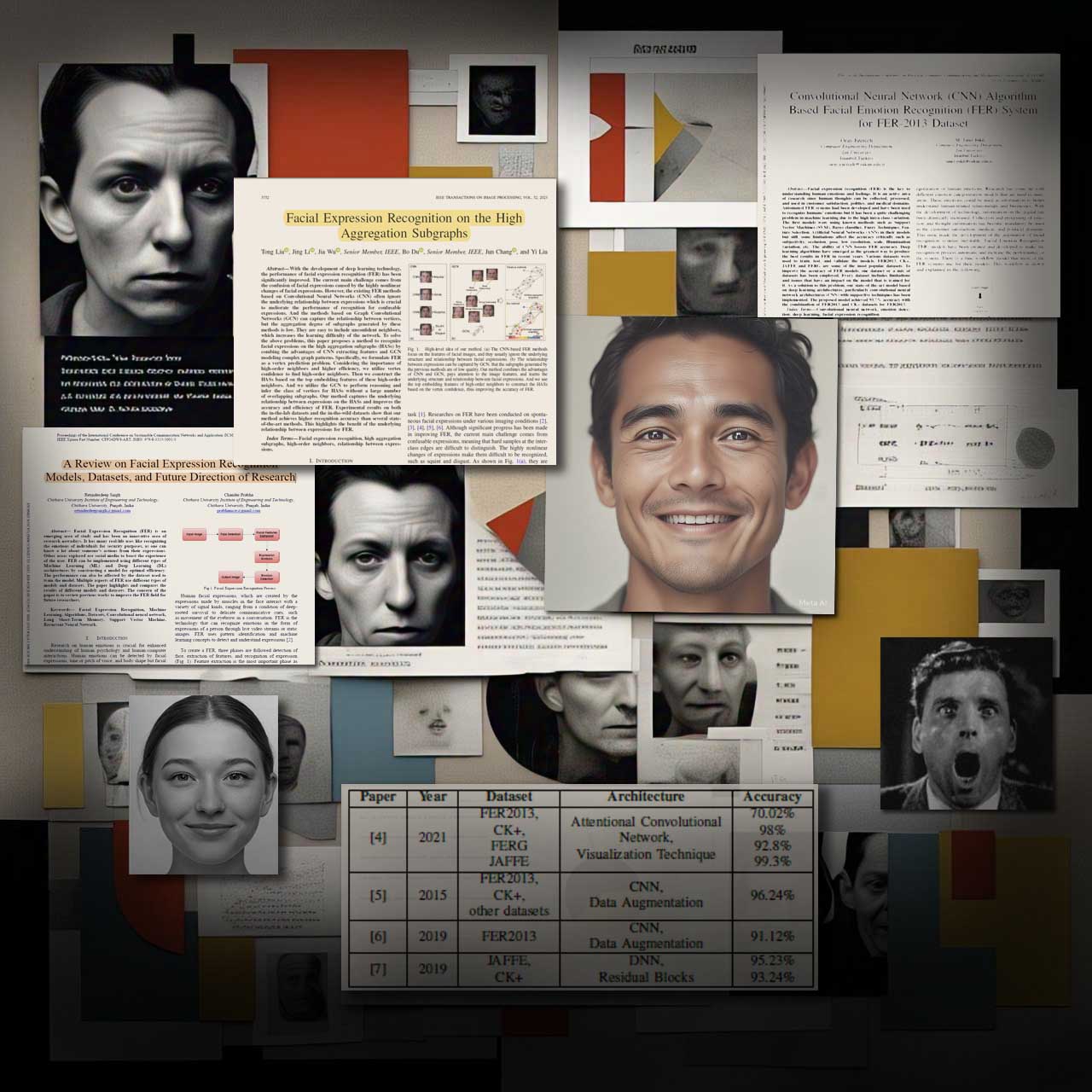

State of the Art

A review of recent research highlights key trade-offs in FER model design:

Power = Precision

RRN + TST tops the charts (100% on CK+) — but demands serious computing power.

Smart & Slim

MobileNetV2 + ResNet-18 delivers solid results (up to 86%) and is well suited to limited hardware.

Transformers Scale

ViT-based models generalize well but require more VRAM and longer training times.

SVMs Fall Behind

Classical SVMs are lightweight and simple but struggle to keep up with deep learning models.

Theoretical Framework

The project is rooted in:

- Ekman's theory of basic emotions (six universal emotions, extended here with a neutral class)

- Convolutional Neural Networks (CNNs) for spatial feature extraction

- Transfer Learning and Fine-tuning

- Cross-domain learning through dataset hybridization and augmentation

Development and Methodology

Dataset Development:

- 23,450 manually reviewed images across seven emotion classes.

- Sources: FER-2013, AffectNet, Oulu-CASIA, MMI, web scraping, Meta & Gemini-generated faces.
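Merging these sources requires a shared label vocabulary, since each dataset names its classes differently and some include categories outside the seven used here (AffectNet, for example, also labels contempt). The sketch below shows one way to harmonize labels before manual review. The alias table, `CANONICAL` order, and `harmonize` function are hypothetical, not the project's actual pipeline.

```python
# Hypothetical canonical class order used as training indices.
CANONICAL = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]

# Hypothetical aliases: raw label strings as they might appear across sources.
ALIASES = {
    "angry": "anger", "anger": "anger",
    "disgust": "disgust", "disgusted": "disgust",
    "fear": "fear", "fearful": "fear", "scared": "fear",
    "happy": "happiness", "happiness": "happiness", "joy": "happiness",
    "sad": "sadness", "sadness": "sadness",
    "surprise": "surprise", "surprised": "surprise",
    "neutral": "neutral", "neutrality": "neutral",
}


def harmonize(records):
    """Map (path, raw_label) pairs to (path, class_index); set aside unknown labels."""
    kept, dropped = [], []
    for path, raw in records:
        name = ALIASES.get(raw.strip().lower())
        if name is None:
            dropped.append((path, raw))  # e.g. "contempt", kept for manual inspection
        else:
            kept.append((path, CANONICAL.index(name)))
    return kept, dropped


if __name__ == "__main__":
    kept, dropped = harmonize([("a.jpg", "Happy"), ("b.jpg", " SAD "), ("c.jpg", "contempt")])
    print(kept, dropped)
```

Returning the dropped records instead of silently discarding them keeps the manual review step auditable.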

Proposed Architecture:

- ResNet-18 pretrained on ImageNet.

- Custom training loop with cosine LR scheduling and optional augmentations.
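Cosine learning-rate scheduling decays the learning rate from a base value to a minimum along half a cosine wave: it drops slowly at first, fastest mid-training, and slowly again near the end. The pure-Python sketch below computes that curve; `cosine_lr` and the example values (30 epochs, base LR 1e-3) are hypothetical, since the write-up does not state its hyperparameters.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float, min_lr: float = 0.0) -> float:
    """Learning rate at `step` under cosine annealing from base_lr to min_lr."""
    # Clamp so the LR stays at min_lr once the schedule has finished.
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    # Hypothetical settings: 30 epochs, base learning rate 1e-3.
    for epoch in (0, 10, 15, 20, 30):
        print(epoch, cosine_lr(epoch, 30, 1e-3))
```

In a PyTorch training loop the same curve comes from `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=num_epochs)`, calling `scheduler.step()` once per epoch.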

Resources Used:

- Intel Core i7 (10th Gen)

- NVIDIA GTX 1650 Ti (4GB VRAM)

- Python, PyTorch, OpenCV, Albumentations

Results

90%

Overall accuracy across 3,521 validation samples.

0.90 F1

Overall F1-score; Happy and Surprise were the highest-scoring classes.

+4 pts

Exceeded the upper end of the accuracy range typically reported for ResNet-18 FER models (~86%) on FER2013-like datasets.

According to Singh & Prabha (2024), FER models using MobileNetV2 and ResNet-18 typically achieve between 82.1% and 86% accuracy on datasets like FER2013. This project's model, based on a refined ResNet-18 architecture, achieved 90% on its validation set, about 4 percentage points above the upper end of this range.
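The "+4" figure is an absolute gap between accuracies, which is easy to confuse with a relative improvement. Using the 86% upper bound reported by Singh & Prabha (2024), the two readings work out as:

$$
\begin{aligned}
\Delta_{\text{abs}} &= 90\% - 86\% = 4 \text{ percentage points} \\
\Delta_{\text{rel}} &= \frac{90 - 86}{86} \approx 4.7\%
\end{aligned}
$$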

Next Steps

- Add emotion intensity and arousal-valence labeling.

- Extend training to temporal data (videos) using LSTMs or 3D CNNs.

- Deploy the model in a lightweight desktop app or mobile tool for psychology, education, or HCI applications.

- Evaluate performance on datasets such as VFEM or AffWild2 to support deeper integration with mental health analysis.